Introduction#

Donald Knuth famously wrote that Premature Optimization is the root of all evil. I, for one, believe that all evil comes from spuriously failing builds. Nothing steals my confidence in a project as quickly as unstable builds alternating between green and red for no reason. This is a story about unstable builds and troubleshooting. More importantly, this story is written to thank all contributors to basic software infrastructure - the infrastructure we all use and take for granted.

Surprise in logs#

Upon logging into Azure Pipelines to review the logs of multiple failed builds, I mentally braced myself for a potentially arduous troubleshooting session. I suspected that a race condition was the culprit that caused non-deterministic outcomes. Therefore, I was surprised to discover the actual reasons for the recent build failures. They were all similar to this:

This was the most interesting part:

Could not transfer artifact org.apache.maven.plugins:maven-clean-plugin:pom:3.2.0 from/to central (https://repo.maven.apache.org/maven2): Connection reset -> [Help 1]

The error comes from the Apache Maven project, which we use to build QuestDB. Maven complained that it could not download the Maven Clean plugin from the Central repository. If this had just been a one-off error, I would have played it down as a network hiccup. However, we were getting the same error multiple times a day!

The investigation, part I#

I am no Maven expert, so I did what every developer would do when troubleshooting a rather complex piece of technology: I Googled it!

Eventually, I stumbled upon this issue.

It says that Azure Pipelines kill idle connections, which does not play nicely with the habit of Maven to pool and re-use HTTP connections. This is consistent with what I saw in builds logs: Maven started tests, which ran for 20+ minutes and passed successfully. Then Maven wanted to download the Clean Plugin to do something else, but the download attempt failed, which killed the whole build.

There was still one question left unanswered: Why did we start getting this error only recently? Well, I had an explanation for this, too: We kept adding tests, so they ran longer. Azure Pipelines killed connections because the total test duration exceeded the idle connection timeout. It all started to make sense.

I also found a Maven configuration property to restrict the Time-To-Live of connections in the pool. The Maven issue I found says:

"Azure users shall set the TTL to 240 seconds or less."

So I sent a Pull Request to set TTL to 30s, announced it in the company Slack, and felt good about myself :)

It took less than 30 minutes, and we got another failure:

The failure had the same cause as before. The fix did not work. Back to square one.

The investigation, part II#

Googling blindly combined with a bit of logical reasoning failed to produce results. There was only one answer: WE NEED MOAR LOGS! Running Maven with verbose logs produced an exception with this beautiful stack trace:

It looks like a typical lengthy Java stack trace. I noticed that that there was

nothing about "Wagon". I knew Wagon was a

Maven component to download dependencies. Even my first fix attempt

configured a Wagon-related property:

-Dmaven.wagon.httpconnectionManager.ttlSeconds=30

So it was suspicious that I could not see anything Wagon-related in the exception stack trace. At this point, I resorted to the 3rd level of troubleshooting: Reading the source code! The Maven project consists of many components; for a non-expert, it's not simple to grasp. Eventually, I made an interesting discovery: Maven dependency resolver supports pluggable (network) transports. Digging deeper, I found 2 Transport implementations that can provide HTTP access:

- The Wagon implementation I knew about

- A new “Native” implementation

The Wagon implementation uses the Apache HTTP Client internally, and it configures the client with a retry handler. There are different retry strategies available. What is important is that the Wagon transport uses three retries by default:

The source code of the “Native” transport implementation is directly in the Maven Resolver project. Judging from the class and package names, it is clear that this is the transport I saw in the exception stack traces on the build server. It also uses the Apache HTTP Client internally but does not configure any retrying strategy!

It appears that Maven is failing quickly instead of attempting to retry when a connection is terminated because the Native transport protocol does not attempt to retry and immediately reports network errors. However, why did this issue appear only recently? I opened Maven 3.9 release notes once again. The paragraph “Potentially Breaking Core Changes” did not have anything suspicious. Luckily, I kept reading and saw this: “Switchable and alternative resolver transports included, with default switched to native transport.” Bingo! This must be it.

Here was my hypothesis: We invoked Maven via the Azure Pipelines Maven Task and we did not specify Maven version explicitly. Hence, it used the default version, which was recently upgraded to Maven 3.9. From the release notes, we learned that Maven 3.9 switched to the new Native transport that does not support retrying. It all started to make sense.

How to test this hypothesis? There were two options:

- Downgrade Maven to version pre-3.9

- Force Maven 3.9 to use the Wagon transport instead of the new Native transport

While browsing Maven source code, I stumbled upon a strategy for selecting transports:

I figured out that forcing the old Wagon transport was as simple as passing

-Dmaven.resolver.transport=wagon to my build command. I

added the flag

and waited eagerly for the build results. And it was a success! The first build

passed, then the second, third, and fourth. All builds were green! Such a relief.

Our precious builds were deterministic again. Problem Solved! Or not?

Contributing Back#

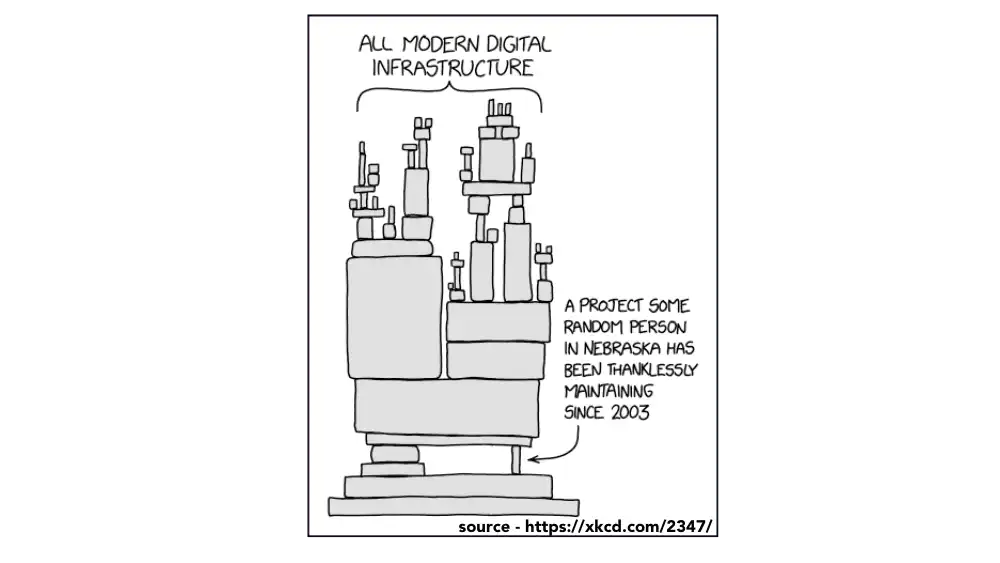

We all consume open source. Apache Maven itself is open source. It’s so ubiquitous that it is impossible to find a Java developer who has never used it. Maven is a basic software infrastructure that we all take for granted. It’s not backed by a large corporation or by a VC-funded startup. Volunteers maintain it. I felt the least I could do was to report my experience to the Maven team, so they could clarify whether it was an intentional behavior or a bug. I opened a ticket and wanted to move on.

The same evening, I saw one of the Maven maintainers create a new issue in the Maven Resolver project and link it to the Maven issue I created earlier. I took it as a confirmation that the behavior was not intentional and started to poke the Maven Resolver source code once again. Only this time with the intention to fix it.

It turned out that the fix was really easy. It was a matter of creating a retry handler and setting the desired retry count. That was exactly what the old Wagon plugin was doing. A few lines got changed. Then I was wondering how to test it. None of the existing Resolver tests exercises scenarios where a connection to a remote repository fails. The existing tests use a Jetty-based server mimicking a remote repository. So I figured out I could add a fault injection: A Jetty handler which force-closes a predefined number of connections. And then test if the resolver can still access the server. Again, a few lines of code:

This infrastructure allowed me to write tests similar to this:

Easy-peasy!

I sent the Pull Request and was ready to forget about it. Merging a PR to new projects can take a while. To my surprise, the maintainers reviewed the PR less than 1 hour after the submission and merged it the next morning. Wow! This is the way to attract new contributors! I admire all people maintaining projects like Maven. They do it in their free time, and I reckon they mostly hear from users when something is wrong and when users are upset. They deserve recognition for what they do. Tamas Cservenak and Slawomir Jaranowski helped me with the PR. If you are reading this: Thank you!

What is the moral of the story?

- Troubleshooting is fun.

- Read release notes carefully.

- Be grateful to the maintainers of basic infrastructure.

- Contributing back feels good!